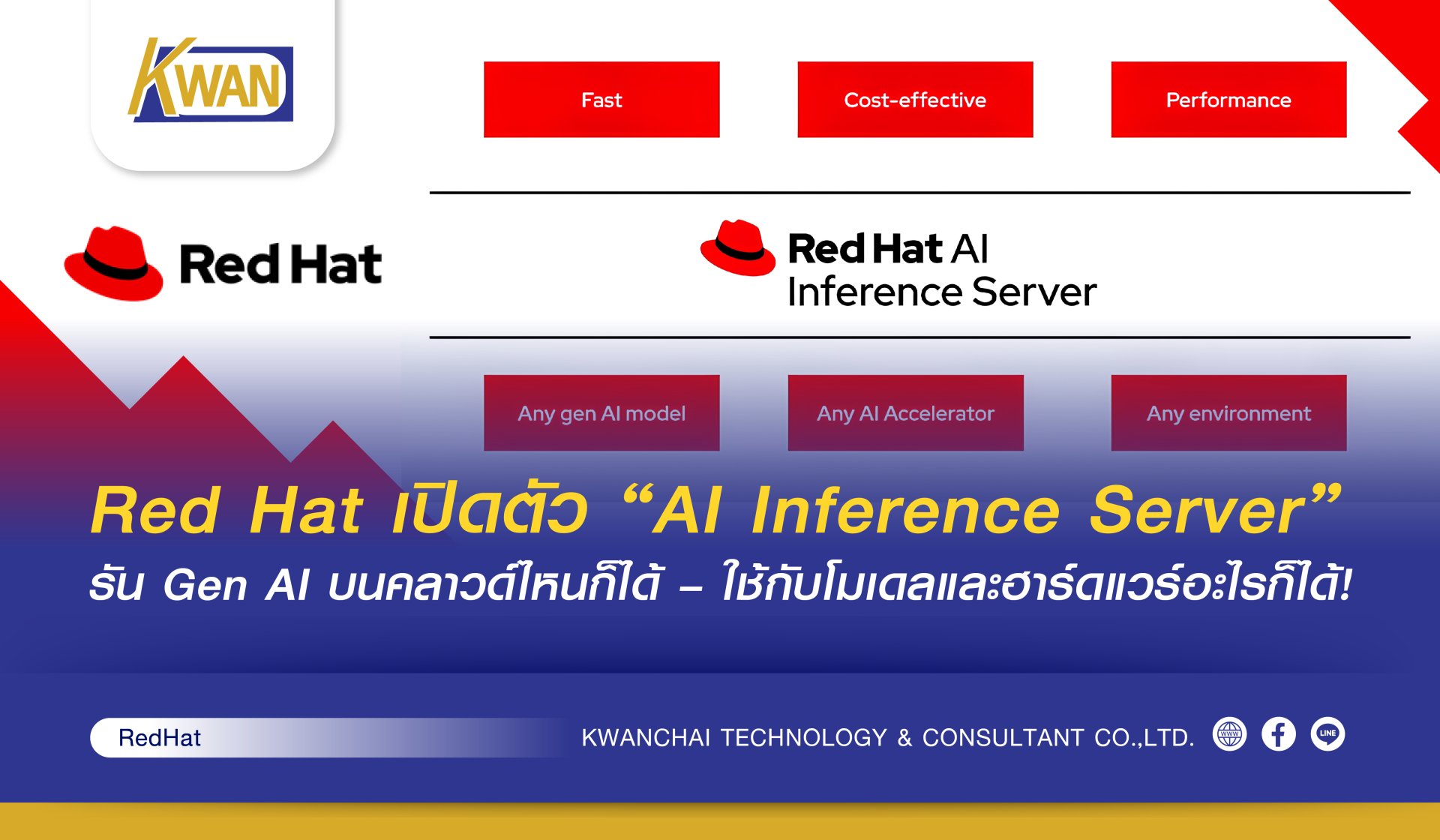

Red Hat Unveils “AI Inference Server” to Run Gen AI on Any Cloud – Use Any Model and Any Hardware!

Red Hat, the world's leading provider of open source solutions, today announced Red Hat AI Inference Server, a major step toward making generative AI (gen AI) accessible to everyone across the hybrid cloud. Delivered as part of Red Hat AI, this new enterprise-grade inference server is built on the vLLM community project and enhanced with Red Hat's Neural Magic technologies. It offers greater speed, accelerator efficiency, and cost-effectiveness in support of Red Hat's vision of running any gen AI model on any AI accelerator in any cloud environment. Whether deployed as a standalone solution or as an integrated component of Red Hat Enterprise Linux AI (RHEL AI) and Red Hat OpenShift AI, the platform helps organizations deploy and scale gen AI in production with confidence.

Inference is the stage at which AI does its real work: pre-trained models translate data into results for real-world applications, many of which depend on fast, accurate responses to user interactions. As gen AI models grow rapidly in scale and complexity, inference can become a major bottleneck that wastes hardware resources, slows responses, and drives up operational costs. A robust inference server that hides this underlying complexity is therefore no longer a luxury but a necessity for unlocking the full potential of AI at scale.

Red Hat addresses these challenges directly with Red Hat AI Inference Server, an open inference solution designed for high performance and equipped with leading model compression and optimization tools. It helps organizations make full use of gen AI by delivering a far more responsive user experience, while giving users the freedom to choose the AI accelerators, models, and IT environments that suit their needs.

vLLM: Expanding Innovation in Inference

Red Hat AI Inference Server is built on the industry-leading vLLM project, which was started at the University of California, Berkeley in mid-2023. This community project delivers high-throughput gen AI inference with support for large input contexts, multi-GPU model acceleration, continuous batching, and more.
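Continuous batching, one of the features listed above, is a large part of how an inference server keeps its accelerators busy. Instead of waiting for every request in a batch to finish before admitting new ones, the server refills freed slots after each decoding step. The toy Python simulation below is a minimal sketch of that idea. It assumes each request needs a fixed, known number of decoding steps and that one step costs the same regardless of batch size. The functions `static_batching_steps` and `continuous_batching_steps` are hypothetical illustrations and do not reflect vLLM's actual scheduler.

```python
from collections import deque


def static_batching_steps(lengths, max_batch):
    """Decoding steps needed when a batch must fully finish before the next one starts.

    Toy model (assumption): each entry in `lengths` is the number of tokens a
    request still has to generate, and every step costs the same.
    """
    steps = 0
    for i in range(0, len(lengths), max_batch):
        batch = lengths[i:i + max_batch]
        # The whole batch is held until its longest request completes.
        steps += max(batch)
    return steps


def continuous_batching_steps(lengths, max_batch):
    """Decoding steps needed when freed slots are refilled after every step."""
    waiting = deque(lengths)
    running = []
    steps = 0
    while waiting or running:
        # Admit queued requests into any free slots before the next step.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decoding step: every running request emits one token.
        running = [remaining - 1 for remaining in running]
        steps += 1
        # Finished requests leave immediately, freeing their slots.
        running = [remaining for remaining in running if remaining > 0]
    return steps


if __name__ == "__main__":
    # Hypothetical workload: two long requests mixed with several short ones.
    lengths = [8, 2, 2, 2, 8, 2, 2, 2]
    print("static:", static_batching_steps(lengths, max_batch=4))
    print("continuous:", continuous_batching_steps(lengths, max_batch=4))
```

With this workload and `max_batch = 4`, static batching takes 16 steps while continuous batching takes 10, because short requests no longer sit idle behind the long request in their batch. Real servers must also manage memory for each request's KV cache, which this sketch ignores.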

vLLM's broad support for publicly available models, together with day zero integration of leading models including DeepSeek, Gemma, Llama, Llama Nemotron, Mistral, Phi, and others, as well as open enterprise-grade reasoning models such as Llama Nemotron, has made it a de facto standard for future AI inference innovation. As more leading model providers adopt vLLM, its role in shaping the future of gen AI continues to grow.

Red Hat AI Inference Server Launch

Red Hat AI Inference Server combines the leading innovations of vLLM with Red Hat's enterprise-grade capabilities. It is available as a standalone container or as part of RHEL AI and Red Hat OpenShift AI.

In any deployment environment, Red Hat AI Inference Server provides users with a robust, scalable distribution of vLLM, along with:

· Intelligent LLM compression tools: reduce the size of both foundation and fine-tuned AI models, minimizing compute consumption while preserving, and potentially improving, model accuracy.

· Optimized model repository: hosted in the Red Hat AI organization on Hugging Face, it provides access to a validated and optimized collection of leading AI models that are ready for inference, helping to deliver 2-4x acceleration without compromising model accuracy.

· Red Hat enterprise support: decades of expertise in bringing community projects into production environments.

· Third-party support: flexibility to deploy Red Hat AI Inference Server on non-Red Hat Linux and Kubernetes platforms, in line with Red Hat's third-party support policy.

Red Hat's Vision: Any Model, Any Accelerator, Any Cloud

The future of AI must be defined by limitless opportunity, not constrained by infrastructure silos. Red Hat sees a world in which organizations can run any model, on any accelerator, in any cloud, and deliver a consistent, superior user experience at a cost that is right for them. To unlock the true potential of their gen AI investments, organizations need a universal inference platform: a common standard for seamless, high-performance AI innovation, today and for years to come.

Red Hat is poised to lay the architectural foundation for the future of AI inference, just as the company pioneered the open enterprise by turning Linux into the foundation of modern IT. vLLM is central to standardized gen AI inference, and Red Hat is building a robust ecosystem not only around the vLLM community but also around llm-d for distributed inference. Its vision is clear: regardless of the AI model, the underlying accelerator, or the application environment, Red Hat intends to make vLLM the definitive open standard for inference across the hybrid cloud.

Red Hat / FAQ Co., Ltd. (PR)